A Chip Simulating Itself

GPT-5.3-Codex-Spark is a smaller version of Codex optimized for fast inference. Powered by the Cerebras Wafer-Scale Engine, it runs at over 1,000 tokens/s—the same chip that runs OpenAI’s GPT OSS 120B at 3,000 tokens/s. I used it to build an interactive simulator of that very chip. The hardware helped explain itself—a recursive loop of silicon and AI.

This isn’t just meta for fun. The infra behind this is coming in screaming hot—directly from the surface of the sun—but it’s a cool glimpse into what will become mainstream in the next few months. And when your hardware is literally sparking at thousands of tokens per second…

…this one sparks joy ✨

Why Wafer-Scale?

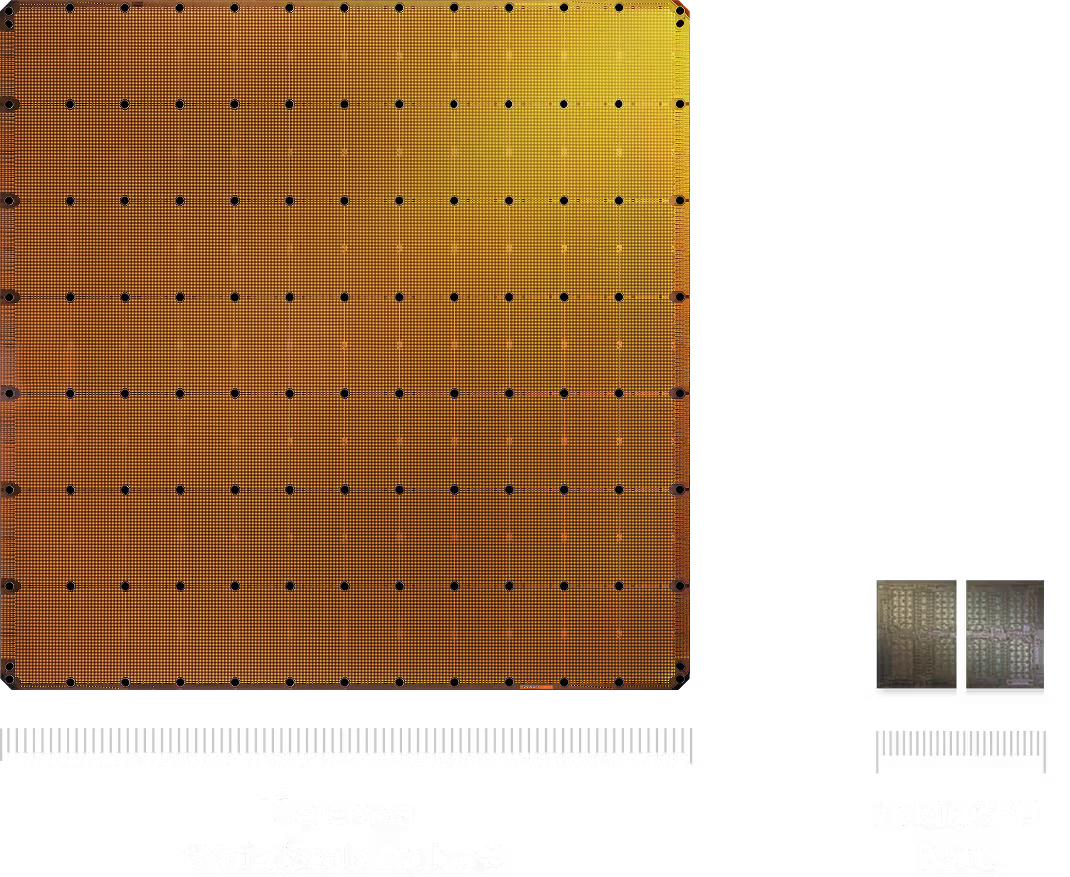

Look closely at the Cerebras chip—you’ll notice a grid-and-holes pattern covering the entire surface. That’s because wafers this large are normally diced into dozens of separate chips. Cerebras instead etches one giant chip across the entire wafer.

Why? On-chip memory. A wafer-scale design can pack 44GB of SRAM directly into the chip. Standard GPUs have tens of megabytes. That difference matters: inference typically spends significant time streaming model weights from external memory into the GPU. With enough SRAM to fit the entire model, those transfers happen at SRAM speed instead—yielding a 15x speedup.

The Problem: 900,000 Cores, Guaranteed Defects

When you manufacture ~900,000 cores on a dinner-plate-sized chip, defects aren’t a matter of if—they’re how many. The Cerebras approach: assume defects will happen, then route around them intelligently.

But how do you explain defect-tolerant mesh routing to someone who’s never thought about chip yields?

As a Cerebras Fellow and Codex Ambassador, right after Codex-Spark launched, I wanted to build something immediately graspable—a single interactive page where you adjust defect rates, run remap logic, and watch packets reroute in real time.

The tight iteration loop (prompt → verify → refine) made it possible to clarify both the explanation and the routing logic until everything clicked.

This simulator is the result. It was built through prompting Codex-Spark and references these public sources:

- Cerebras 100x defect tolerance post

- IEEE Micro: Path to Successful Wafer-Scale Integration

- Cerebras WSE architecture whitepaper

Codex-Spark is also powered by Cerebras’ Wafer Scale Engine 3, a purpose-built AI accelerator for high-speed inference that gives the model a latency-first serving tier. That is why this post is able to move from idea to working interaction so quickly.

What the Simulator Shows

The simulation has three practical layers:

- A tile mesh: simplified compute regions connected by a mesh network.

- Fault controls: manufacturing defects, inter-tile link defects, remap budget, and reliability-run count.

- Packet flow + remap: route search around blocked regions and optional repair conversion using spare capacity.

Each render reports:

- healthy vs defective/repaired tiles

- bad links and blocked connectivity

- route found / blocked outcomes

- path length and a reliability summary from repeated random trials

That output mirrors the core lesson from wafer-scale systems: local defects are manageable when routing options and spare resources are intelligently coordinated.

Try It

The simulator is embedded below and uses client-side JavaScript only. Start with the defaults, then experiment.

Default settings: manufacturing tile defects 12%, inter-tile link defects 6%, spare-core remap budget 7%, and 40 yield simulation runs.

Then try:

- Disable remap to see failure impact.

- Increase link defects and compare route resilience.

- Turn mesh links off and compare observability.

How It Was Built: The Real-Time Codex-Spark Workflow

This project is as much about the workflow as it is about hardware simulation. Building with Codex-Spark felt genuinely different from normal coding cycles.

What Makes It Feel Different

- Ask, don’t script: I could just ask for concrete changes like “add a spare-core budget slider” or “make the status line conversational,” and get it immediately.

- Ship faster, learn faster: each iteration was visible in seconds, so wrong assumptions were corrected in minutes, not a full afternoon.

- Real-time stays real-time: the UI updates and simulation loops stayed fluid while iterating—no waiting, no lag.

- You can ask for features, not just code: examples like source-note cards, clearer beginner text, and route status semantics were all handled the same way through conversation.

The Latency Breakthrough That Enables This

On agentic software engineering benchmarks like SWE-Bench Pro and Terminal-Bench 2.0, Codex-Spark produces more capable responses than GPT-5.1-Codex-mini while completing tasks in a fraction of the time. It excels at precise edits, plan revision, and contextual questions about your codebase—exactly the tight loop this simulator needed.

As OpenAI trained Codex-Spark, they realized model speed was just part of the equation for real-time collaboration. They implemented end-to-end latency improvements that benefit all models:

- 80% reduction in overhead per client/server roundtrip

- 30% reduction in per-token overhead

- 50% reduction in time-to-first-token

Under the hood, they streamlined how responses stream from client to server and back, rewrote key pieces of the inference stack, and reworked session initialization. Through a persistent WebSocket connection and targeted optimizations inside the Responses API, Codex stays responsive as you iterate.

This is why “ask for a feature, see it work, ask for the next pass” actually works in practice—the latency is low enough that the conversation never breaks your flow.

The Loop Closes

A chip that runs AI fast enough to explain itself in real time. A simulator built in minutes instead of weeks because the latency finally disappeared. Hardware teaching its own architecture through the models it accelerates.

This is the recursion we’re entering: the infrastructure gets fast enough that building on it feels instant, so we build tools that explain the infrastructure, which makes the next person faster, which pushes the infrastructure further.

The wafer isn’t just running the model. It’s teaching you how it works while you use it. And that feedback loop—silicon to software to understanding and back—is what sparks next.